How to Harvest Facebook updates with Python, MySQL or SQL Server

While most people focus on search.twitter.com (or automated pulls from their API) for data collection regarding the "pulse" on a particular topic or brand - they often overlook the fact that the public Facebook timeline can be a useful source of data as well.

While most people focus on search.twitter.com (or automated pulls from their API) for data collection regarding the "pulse" on a particular topic or brand - they often overlook the fact that the public Facebook timeline can be a useful source of data as well.

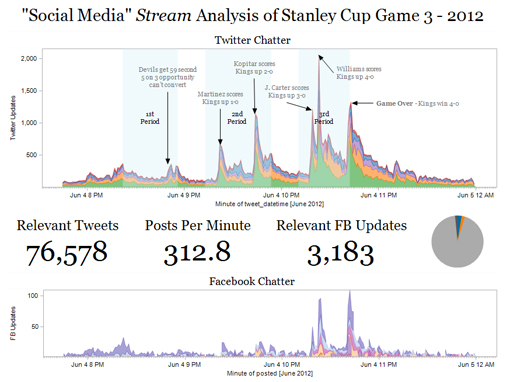

That's how I was able to harvest both twitter and facebook streams during the Stanley Cup games and look for similarities (like, tons).

The BOOK OF FACE!

Here I am going to show you 2 quick and dirty scripts will gather data from the Facebook API and insert it into a database (using MySQL or Microsoft SQL Server).

First, the SQL tables..

(don't fret if your cut and paste skills are shitty, I've got zip files at the bottom of the post)

MySQL

SET time_zone = "+00:00";

--

-- Database: `facebank`

--

-- --------------------------------------------------------

--

-- Table structure for table `fbsearchlog`

--

CREATE TABLE IF NOT EXISTS `fbsearchlog` (

`RunId` INT(11) NOT NULL AUTO_INCREMENT,

`BatchId` INT(11) DEFAULT NULL,

`RunDate` datetime DEFAULT NULL,

`Keyword` VARCHAR(50) DEFAULT NULL,

`HarvestedThisRun` INT(11) DEFAULT NULL,

`TotalHarvested` INT(11) DEFAULT NULL,

`RunTime` FLOAT DEFAULT NULL,

PRIMARY KEY (`RunId`)

) ENGINE=InnoDB DEFAULT CHARSET=latin1 AUTO_INCREMENT=338 ;

-- --------------------------------------------------------

--

-- Table structure for table `searchbank`

--

CREATE TABLE IF NOT EXISTS `searchbank` (

`id` INT(11) NOT NULL AUTO_INCREMENT,

`run_id` INT(11) DEFAULT NULL,

`fid` VARCHAR(250) DEFAULT NULL,

`from_name` VARCHAR(250) DEFAULT NULL,

`from_id` BIGINT(20) DEFAULT NULL,

`keyword` VARCHAR(50) DEFAULT NULL,

`type` VARCHAR(50) DEFAULT NULL,

`posted` datetime DEFAULT NULL,

`message` VARCHAR(5000) DEFAULT NULL,

`story` VARCHAR(5000) DEFAULT NULL,

`link` VARCHAR(1500) DEFAULT NULL,

`likes` INT(11) DEFAULT NULL,

`comments` INT(11) DEFAULT NULL,

`shares` INT(11) DEFAULT NULL,

`harvested` datetime DEFAULT NULL,

`page_likes` INT(11) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=latin1 AUTO_INCREMENT=12255 ;

-- --------------------------------------------------------

--

-- Table structure for table `searches`

--

CREATE TABLE IF NOT EXISTS `searches` (

`id` INT(11) NOT NULL AUTO_INCREMENT,

`run_id` INT(11) DEFAULT NULL,

`fid` VARCHAR(250) DEFAULT NULL,

`from_name` VARCHAR(250) DEFAULT NULL,

`from_id` BIGINT(20) DEFAULT NULL,

`keyword` VARCHAR(50) DEFAULT NULL,

`type` VARCHAR(50) DEFAULT NULL,

`posted` datetime DEFAULT NULL,

`message` VARCHAR(5000) DEFAULT NULL,

`story` VARCHAR(5000) DEFAULT NULL,

`link` VARCHAR(1500) DEFAULT NULL,

`likes` INT(11) DEFAULT NULL,

`comments` INT(11) DEFAULT NULL,

`shares` INT(11) DEFAULT NULL,

`harvested` datetime DEFAULT NULL,

`page_likes` INT(11) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=MEMORY DEFAULT CHARSET=latin1 AUTO_INCREMENT=1 ;

Note that one table "searches" is a MEMORY table - that's because its just used as a temp table to avoid dupes. We don't need to hold on to that data, and in-memory tables are hella fast.

SQL Server

In this case 2008R2, but any semi-recent version will work (besides SQL Server 2008 Express is a great database, and is freaking free).

GO

/****** Object: Table [dbo].[fbsearchLog] Script Date: 07/05/2012 14:22:27 ******/

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_PADDING ON

GO

CREATE TABLE [dbo].[fbsearchLog](

[RunId] [INT] IDENTITY(1,1) NOT NULL,

[BatchId] [INT] NULL,

[RunDate] [datetime] NULL,

[Keyword] [VARCHAR](50) NULL,

[HarvestedThisRun] [INT] NULL,

[TotalHarvested] [INT] NULL,

[RunTime] [FLOAT] NULL,

CONSTRAINT [PK_fbsearchLog] PRIMARY KEY CLUSTERED

(

[RunId] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

GO

SET ANSI_PADDING OFF

GO

USE [fbOpenGraph]

GO

/****** Object: Table [dbo].[SearchBank] Script Date: 07/05/2012 14:22:39 ******/

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_PADDING ON

GO

CREATE TABLE [dbo].[SearchBank](

[id] [INT] IDENTITY(1,1) NOT NULL,

[run_id] [INT] NULL,

[fid] [VARCHAR](250) NULL,

[from_name] [VARCHAR](250) NULL,

[from_id] [BIGINT] NULL,

[keyword] [VARCHAR](50) NULL,

[TYPE] [VARCHAR](50) NULL,

[posted] [datetime] NULL,

[message] [VARCHAR](MAX) NULL,

[story] [VARCHAR](MAX) NULL,

[link] [VARCHAR](1500) NULL,

[likes] [INT] NULL,

[comments] [INT] NULL,

[shares] [INT] NULL,

[harvested] [datetime] NULL,

[page_likes] [INT] NULL,

CONSTRAINT [PK_SearchBank] PRIMARY KEY CLUSTERED

(

[id] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

GO

SET ANSI_PADDING OFF

GO

USE [fbOpenGraph]

GO

/****** Object: Table [dbo].[Searches] Script Date: 07/05/2012 14:22:48 ******/

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_PADDING ON

GO

CREATE TABLE [dbo].[Searches](

[id] [INT] IDENTITY(1,1) NOT NULL,

[run_id] [INT] NULL,

[fid] [VARCHAR](250) NULL,

[from_name] [VARCHAR](250) NULL,

[from_id] [BIGINT] NULL,

[keyword] [VARCHAR](50) NULL,

[TYPE] [VARCHAR](50) NULL,

[posted] [datetime] NULL,

[message] [VARCHAR](MAX) NULL,

[story] [VARCHAR](MAX) NULL,

[link] [VARCHAR](1500) NULL,

[likes] [INT] NULL,

[comments] [INT] NULL,

[shares] [INT] NULL,

[harvested] [datetime] NULL,

[page_likes] [INT] NULL,

CONSTRAINT [PK_Searches] PRIMARY KEY CLUSTERED

(

[id] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

Okay - now that our data structure is in place - let's get some Pythonin' goin' onin'!

First the MYSQL version

(will work on Windows and Linux)

Note: you're going to need to following Python (2.7 32-bit recommended) modules to use this bad boy as-is:

- MySQLdb

- colorama (colors are FUN!)

- simplejson

import urllib

from datetime import timedelta

from datetime import date

from time import *

import string, os, sys, subprocess, time

import simplejson as json

from time import time, sleep

startTime = time()

from colorama import init

init() #init colorama (needed on win32, not in *nix)

from colorama import Fore, Back, Style

import MySQLdb

pp = pprint.PrettyPrinter(indent=3)

conn = MySQLdb.connect(host='localhost', user='root', db='facebank', passwd='harvie') #, passwd='fsdfsdfsdf'

conn.autocommit(True)

cur = conn.cursor()

cur.execute("select ifnull((select ifnull(run_id,0) from searchbank order by run_id desc limit 0,1),0)")

run_id = cur.fetchall()[0][0]+1

print 'BatchRun #' + str(run_id)

newones = 100

keywords = ['metallica','james hetfield', 'lars ulrich', 'kirk hammett', 'rob trujillo', 'jason newsted','cliff burton','tsoukalos','ancient aliens','bigfoot','vaynermedia']

# put yer keywords here!

for keyword in keywords:

sleep(1)

nextpage = 'https://graph.facebook.com/search?q="'+str(keyword)+'"&type=post&limit=100'

newones = 100

while newones > 0 and nextpage <> 'NO':

sleep(1)

f = urllib.urlopen(nextpage)

s = f.read()

f.close()

ss = json.loads(s)

#pp.pprint(ss)

try:

for num in range(0,len(ss['data'])):

#print '*************************'

#for majorkey, subdict in ss['data'][num].iteritems(): #'paging' or 'data'?

# print majorkey

# print subdict

# for subkey, value in subdict.iteritems():

# print subkey, value

#print ' '+str(ss['data'][num]['created_time'].encode('ascii', 'ignore'))

createdtime = str(ss['data'][num]['created_time'].encode('ascii', 'ignore'))

#print ' '+str(ss['data'][num]['type'].encode('ascii', 'ignore'))+'!'

fbtype = str(ss['data'][num]['type'].encode('ascii', 'ignore'))

try:

#print ' msg '+str(ss['data'][num]['message'].replace("'","''").replace(';','').encode('ascii', 'ignore'))+'!'

fbmessage = str(ss['data'][num]['message'].replace("'","''").replace(';','').encode('ascii', 'ignore'))

except:

fbmessage = ''

try:

#print ' sty '+str(ss['data'][num]['story'].replace("'","''").replace(';','').encode('ascii', 'ignore'))+'!'

fbstory = str(ss['data'][num]['story'].replace("'","''").replace(';','').encode('ascii', 'ignore'))

except:

fbstory = ''

try:

#print ' '+str(ss['data'][num]['comments']['count'])+'.'

fbcomments = str(ss['data'][num]['comments']['count'])

except:

fbcomments = 0

try:

#print ' '+str(ss['data'][num]['likes']['count'])+'.'

fblikes = str(ss['data'][num]['likes']['count'])

except:

fblikes = 0

try:

#print ' '+str(ss['data'][num]['shares']['count'])+'.'

fbshares = str(ss['data'][num]['shares']['count'])

except:

fbshares = 0

try:

namee = str(ss['data'][num]['from']['name'])

except:

namee = ''

try:

#print ' '+str(ss['data'][num]['link'])+'.'

link = str(ss['data'][num]['link']).replace("'","''")

except:

link = ''

#print '*************************'

#print str(ss['data'][num]['id'])

fid = str(ss['data'][num]['id'])

print str(ss['data'][num]['id']) + ' - ' + namee + ' (' + fbtype + ')'

from_name = namee.replace("'","''").encode('ascii', 'ignore')

#print str(ss['data'][num]['from']['id'])

from_id = str(ss['data'][num]['from']['id'])

query = """insert into searches (fid, from_id, from_name, keyword, type, link, posted, message, story, likes, comments, shares, harvested, run_id)

values ('"""+str(fid)+"""', '"""+str(from_id)+"""', '"""+str(from_name)+"""', '"""+str(keyword)+"""', '"""+str(fbtype)+"""', '"""+str(link)+"""',cast('"""+str(createdtime).replace('+0000','')+"""' as datetime), '"""+str(fbmessage)+"""', '"""+str(fbstory)+"""', '"""+str(fblikes)+"""', '"""+str(fbcomments)+"""', '"""+str(fbshares)+"""', now(), '"""+str(run_id)+"""')"""

#print query

try:

cur.execute(query)

except:

print '#################### SQL ISSUE ###########################'

print query

print '#################### SQL ISSUE ###########################'

#print ' '+str(ss['data'][num]['message'].encode('ascii', 'ignore'))+' '

conn.commit()

#print ss['paging']['next']

print str(len(ss['data'])) + ' = element "data" length'

#sleep(1)

cur.execute("""insert into searchbank (fid, from_id, from_name, keyword, type, link, posted, message, story, likes, comments, shares, harvested, run_id)

select distinct fid, from_id, from_name, keyword, type, link, posted, message, story, likes, comments, shares, harvested, run_id from searches where fid NOT in

(select distinct fid from searchbank)""")

cur.execute("""delete from searches where keyword = '"""+str(keyword)+"""'""")

cur.execute("select ifnull((select ifnull(TotalHarvested,0) from fbsearchlog where keyword = '"+str(keyword)+"' order by rundate desc limit 0,1),0)")

total_harvested = cur.fetchone()[0]

cur.execute("""insert into fbsearchlog (BatchId, keyword, RunDate, HarvestedThisRun, TotalHarvested) values

(

'"""+str(run_id)+"""',

'"""+str(keyword)+"""',

now(),

((select count(*) from searchbank where keyword = '"""+str(keyword)+"""')-"""+str(total_harvested)+"""),

(select count(*) from searchbank where keyword = '"""+str(keyword)+"""')

)""")

conn.commit()

cur.execute("""select ifnull(HarvestedThisRun,TotalHarvested) from fbsearchlog where BatchId = '"""+str(run_id)+"""' and keyword = '"""+str(keyword)+"""' order by RUnDate desc limit 0,1""")

harvienum = cur.fetchone()

newones = harvienum[0]

print Fore.GREEN + Style.BRIGHT + str(keyword) + Style.RESET_ALL

print str(newones) + ' actually kept'

try:

nextpage = ss['paging']['next']

print nextpage

except:

nextpage = 'NO'

print 'No next page avail'

except:

print 'Some kind of API errors - invalid data JSON returned maybe?'

nextpage = 'NO'

newones = 0

print 'ERROR'

print sys.exc_info()[0]

print sys.exc_info()[1]

sleep(2)

#sleep(2)

elapsed = time() - startTime

print "Finished. Total time: " + strftime('%H:%M:%S', gmtime(elapsed))

done_time = strftime('%H:%M:%S', gmtime(elapsed))

Note: the only stuff you SHOULD have to change is the MySQL connection info and your list of keywords (unless you really want to harvest all the Facebook mentions of 'lars ulrich', in which case - knock yourself out!)

Now the SQL Server version

Note: you're going to need to following Python (2.7 32-bit recommended) modules to use this one as-is:

import urllib

from datetime import timedelta

from datetime import date

from time import *

import string, os, sys, subprocess, time

import simplejson as json

#import winsound

from time import clock

import time

clock()

import pymssql

pp = pprint.PrettyPrinter(indent=3)

conn = pymssql.connect(host='localhost:1450', user='sa', password='harvie',database='fbopengraph')

conn.autocommit(True)

cur = conn.cursor()

cur.execute("select isnull((select top 1 isnull(run_id,0) from fbopengraph.dbo.searchbank order by run_id desc),0)")

run_id = cur.fetchall()[0][0]+1

print run_id

newones = 100

keywords = ['bic','metallica','james hetfield', 'lars ulrich', 'kirk hammett', 'rob trujillo', 'jason newsted','cliff burton','garyvee','vaynerchuk','tsoukalos','ancient aliens','bigfoot','vaynermedia','orion']

for keyword in keywords:

time.sleep(1)

nextpage = 'https://graph.facebook.com/search?q="'+str(keyword)+'"&type=post&limit=100'

#homelink = 'https://graph.facebook.com/'+str(fid)

newones = 100

while newones > 0 and nextpage <> 'NO':

time.sleep(2)

f = urllib.urlopen(nextpage)

s = f.read()

f.close()

ss = json.loads(s)

pp.pprint(ss)

try:

for num in range(0,len(ss['data'])):

print '*************************'

for majorkey, subdict in ss['data'][num].iteritems(): #'paging' or 'data'?

print majorkey

#print subdict

#for subkey, value in subdict.iteritems():

# print subkey, value

print ' '+str(ss['data'][num]['created_time'].encode('ascii', 'ignore'))

createdtime = str(ss['data'][num]['created_time'].encode('ascii', 'ignore'))

print ' '+str(ss['data'][num]['type'].encode('ascii', 'ignore'))+'!'

fbtype = str(ss['data'][num]['type'].encode('ascii', 'ignore'))

try:

print ' msg '+str(ss['data'][num]['message'].replace("'","''").replace(';','').encode('ascii', 'ignore'))+'!'

fbmessage = str(ss['data'][num]['message'].replace("'","''").replace(';','').encode('ascii', 'ignore'))

except:

fbmessage = ''

try:

print ' sty '+str(ss['data'][num]['story'].replace("'","''").replace(';','').encode('ascii', 'ignore'))+'!'

fbstory = str(ss['data'][num]['story'].replace("'","''").replace(';','').encode('ascii', 'ignore'))

except:

fbstory = ''

try:

print ' '+str(ss['data'][num]['comments']['count'])+'.'

fbcomments = str(ss['data'][num]['comments']['count'])

except:

fbcomments = 0

try:

print ' '+str(ss['data'][num]['likes']['count'])+'.'

fblikes = str(ss['data'][num]['likes']['count'])

except:

fblikes = 0

try:

print ' '+str(ss['data'][num]['shares']['count'])+'.'

fbshares = str(ss['data'][num]['shares']['count'])

except:

fbshares = 0

try:

print ' '+str(ss['data'][num]['link'])+'.'

link = str(ss['data'][num]['link']).replace("'","''")

except:

link = ''

print '*************************'

print str(ss['data'][num]['id'])

fid = str(ss['data'][num]['id'])

print str(ss['data'][num]['from']['name'].replace("'","''").encode('ascii', 'ignore'))

from_name = str(ss['data'][num]['from']['name'].replace("'","''").encode('ascii', 'ignore'))

print str(ss['data'][num]['from']['id'])

from_id = str(ss['data'][num]['from']['id'])

query = """insert into fbopengraph.dbo.searches (fid, from_id, from_name, keyword, type, link, posted, message, story, likes, comments, shares, harvested, run_id)

values ('"""+str(fid)+"""', '"""+str(from_id)+"""', '"""+str(from_name)+"""', '"""+str(keyword)+"""', '"""+str(fbtype)+"""', '"""+str(link)+"""',convert(datetime,cast(left('"""+str(createdtime)+"""',19) as nvarchar(50)),126), '"""+str(fbmessage)+"""', '"""+str(fbstory)+"""', '"""+str(fblikes)+"""', '"""+str(fbcomments)+"""', '"""+str(fbshares)+"""', getdate(), '"""+str(run_id)+"""')"""

print query

cur.execute(query)

#print ' '+str(ss['data'][num]['message'].encode('ascii', 'ignore'))+' '

conn.commit()

#print ss['paging']['next']

print str(len(ss['data'])) + ' = element "data" length'

time.sleep(1)

cur.execute("""insert into searchbank (fid, from_id, from_name, keyword, type, link, posted, message, story, likes, comments, shares, harvested, run_id)

select distinct fid, from_id, from_name, keyword, type, link, posted, message, story, likes, comments, shares, harvested, run_id from searches where fid NOT in

(select distinct fid from searchbank)""")

cur.execute("""delete from searches where keyword = '"""+str(keyword)+"""'""")

cur.execute("""insert into fbsearchlog (BatchId, keyword, RunDate, HarvestedThisRun, TotalHarvested) values

(

'"""+str(run_id)+"""',

'"""+str(keyword)+"""',

getdate(),

((select count(*) from searchbank where keyword = '"""+str(keyword)+"""')-(select top 1 isnull(TotalHarvested,0) from fbsearchlog where keyword = '"""+str(keyword)+"""' order by RunDate desc)),

(select count(*) from searchbank where keyword = '"""+str(keyword)+"""')

)""")

conn.commit()

cur.execute("""select top 1 isnull(HarvestedThisRun,TotalHarvested) from fbopengraph.dbo.fbsearchlog where BatchId = '"""+str(run_id)+"""' and keyword = '"""+str(keyword)+"""' order by RUnDate desc""")

harvienum = cur.fetchone()

newones = harvienum[0]

print str(keyword)

print str(newones) + ' actually kept'

try:

nextpage = ss['paging']['next']

print nextpage

except:

nextpage = 'NO'

print 'No next page avail'

except:

print 'Some kind of API errors - invalid data JSON returned maybe?'

nextpage = 'NO'

newones = 0

print 'ERROR'

print sys.exc_info()[0]

print sys.exc_info()[1]

#winsound.PlaySound("*", winsound.SND_ALIAS)

end_script = clock()

print "Finished: %s minutes" % str(end_script / 60)

Again, you only need to change connection info and keyword list.

Like I said, its quick and dirty - but hopefully it's useful to someone!

When using this script - it will initially attempt to search as far back as possible until it runs out of results or hits an error. Upon running it again, it will look for new results and quit digging when it encounters too many dupes (stuff you already have).

All the results will be in the 'searchbank' table, and counts and stats about the data you pulled will be in the 'fbsearchlog' table