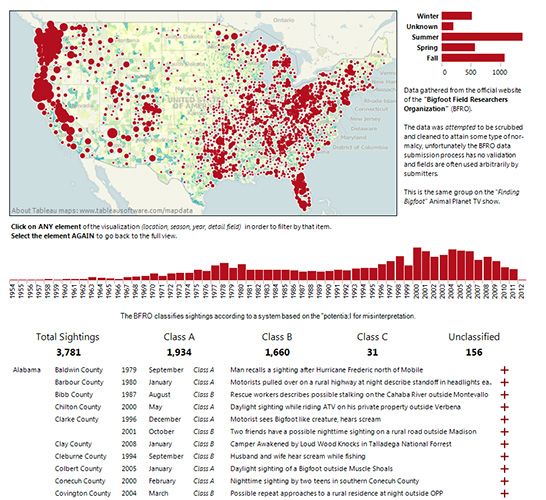

Visualizing Bigfoot of the United States

A fun little Viz I did about Bigfoot sightings.

I guess you could file this under "Too Much Time on my Hands", but hey, people said that about my Metallica shit too, and that was pure genius. :)

Basically, I scraped all the sighting info off the Bigfoot Field Research Organization (BFRO) site with Python and the Scrapemark module, inserted it into a local SQL Server database, cleaned it up (good lord, what a f*cking mess of data in some places) and threw up a pretty nice-looking Viz using Tableau Public.
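Scrapemark dates from the Python 2 era. For anyone trying this today, here is a minimal Python 3 sketch of the first scraping step (pulling state names and links off the index page) using only the standard library's `html.parser`. It assumes the links sit in `<td class="cs"><b><a href=...>` cells, the same markup the script's first Scrapemark pattern targets. `StateLinkParser` and `extract_state_links` are hypothetical names, not part of any library.

```python
from html.parser import HTMLParser


class StateLinkParser(HTMLParser):
    """Collect (name, href) pairs for links inside <td class="cs"> cells."""

    def __init__(self):
        super().__init__()
        self.in_cell = False  # currently inside a <td class="cs">
        self.href = None      # href of the <a> we are inside, if any
        self.text = []        # text chunks of the current link
        self.links = []       # collected (name, href) pairs

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, so <TD class=cs> matches too
        attrs = dict(attrs)
        if tag == "td" and attrs.get("class") == "cs":
            self.in_cell = True
        elif tag == "a" and self.in_cell and "href" in attrs:
            self.href = attrs["href"]
            self.text = []

    def handle_data(self, data):
        if self.href is not None:
            self.text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self.href is not None:
            self.links.append(("".join(self.text).strip(), self.href))
            self.href = None
        elif tag == "td":
            self.in_cell = False


def extract_state_links(html):
    """Return [(state_name, relative_href), ...] in page order."""
    parser = StateLinkParser()
    parser.feed(html)
    parser.close()
    return parser.links
```

Usage would be something like `links = extract_state_links(page_html)`, then prefixing each href with the site root before fetching the next level down. A real HTML parser is more forgiving of attribute quoting and tag case than a literal pattern match.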

I'm pretty happy with it - it's quite interactive, and hopefully someone finds it fun or interesting.

Not too shabby for a couple hours' worth of work that was spawned from sitting on the couch watching a re-run of "Finding Bigfoot" on Animal Planet - after a few beers - and laughing to myself,

"Hah, it'd be funny to map out some freaking Bigfoot sightings."

On another note: after doing my research and harvesting the info, I wish they'd revamp their site. Not only should they display this "data" in a more visual / analytical way, but more importantly, they should be collecting it in a better way - validating fields, trying to make inferences, and not just allowing anything to fly. Maybe it's because I'm a data guy (and a bit of a purist at times), but still. It would be cool.
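To make "validating fields" concrete, here is a minimal Python 3 sketch of the kind of check a report form (or a cleanup pass) could run on each record. The field names mirror the keys the scraper pulls out (`state`, `county`, `year`, `season`, `month`); the allowed season and month values, the required-field list, and the 1800 lower bound on years are assumptions for illustration, not the BFRO's actual rules.

```python
import datetime

# Assumed vocabularies; the real site's values may differ.
VALID_SEASONS = {"Spring", "Summer", "Fall", "Winter"}
VALID_MONTHS = {
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
}
REQUIRED_FIELDS = ("state", "county", "year")  # hypothetical minimum


def validate_sighting(record):
    """Return a list of problems found in one scraped report (empty list = valid)."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not (record.get(field) or "").strip():
            problems.append("missing " + field)

    # Year must be a plausible four-digit year, not "early 70s" or "2 years ago".
    year = (record.get("year") or "").strip()
    if year:
        this_year = datetime.date.today().year
        if not (year.isdigit() and len(year) == 4 and 1800 <= int(year) <= this_year):
            problems.append("bad year: %r" % year)

    season = (record.get("season") or "").strip()
    if season and season not in VALID_SEASONS:
        problems.append("bad season: %r" % season)

    month = (record.get("month") or "").strip()
    if month and month not in VALID_MONTHS:
        problems.append("bad month: %r" % month)
    return problems
```

Rejecting (or at least flagging) a record at submission time is far cheaper than untangling free text like "early 70s" in the YEAR field after the fact.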

Oh well.

I shouldn't stress over the data compilation of (possibly) mythical creatures. :)

It is the DEFINITION of 'quick and dirty', so be warned - I take no responsibility for anything.

```python
import sys
import time
import urllib

import pymssql
from scrapemark import scrape

start_time = time.time()  # wall-clock start, for the timing report at the end

mssql_db = pymssql.connect(host='localhost', user='sa', password='#####')
mssql_cursor = mssql_db.cursor()

# Start every run from an empty table
mssql_cursor.execute("truncate table BigFootData.dbo.Sightings")
mssql_db.commit()


def fetch(url):
    """Fetch a page; if the request fails, wait 30 seconds and try once more."""
    try:
        f = urllib.urlopen(url)
    except IOError:
        time.sleep(30)
        f = urllib.urlopen(url)
    html = f.read()
    f.close()
    return html


def save_sighting(t, sighting_url):
    """Insert one scraped report; if that fails, insert a stub row describing the error."""
    try:
        # Values are passed as query parameters, so pymssql handles the quoting
        mssql_cursor.execute("""insert into BigFootData.dbo.Sightings
    (bfroid, submitted, title, class, [month], [date], [year], season, state, county,
     locationdetails, nearesttown, nearestroad, observed, alsonoticed, otherwitnesses,
     otherstories, timeandconditions, environment, URL)
values
    (rtrim(ltrim(%s)), %s, %s, %s, %s, %s, %s, %s, %s, %s,
     %s, %s, %s, %s, %s, %s,
     %s, %s, %s, %s)""",
            (t['report_number'], t['submitted'], t['title'], t['class'],
             t['month'], t['date'], t['year'], t['season'], t['state'],
             t['county'], t['location_details'], t['nearest_town'],
             t['nearest_road'], t['observed'], t['also_noticed'],
             t['other_witnesses'], t['other_stories'],
             t['time_and_conditions'], t['environment'], sighting_url))
        mssql_db.commit()
    except Exception:
        err_type, err_value = sys.exc_info()[:2]
        print 'ERROR'
        print err_type
        print err_value
        errorz = 'ERROR ' + str(err_type) + ' - ' + str(err_value)
        # .get() so a report missing one of these fields still gets logged
        mssql_cursor.execute("""insert into BigFootData.dbo.Sightings
    (state, county, [month], season, [year], locationdetails, URL)
values (%s, %s, %s, %s, %s, %s, %s)""",
            (t.get('state'), t.get('county'), t.get('month'), t.get('season'),
             t.get('year'), errorz, sighting_url))
        mssql_db.commit()


# Level 1: the main database page links to one page per state
html = fetch("http://www.bfro.net/gdb/")
feetmaster = scrape("""
{*
<td class="cs"><b><a href="{{ [index].statepage_link }}">{{ [index].statename }}</a></b></td>
*}
""", html)

for item in feetmaster:
    for i in feetmaster[item]:
        state_name = i['statename']
        print 'Now working on', state_name
        state_url = 'http://www.bfro.net' + i['statepage_link']
        time.sleep(1)  # be polite between state pages
        chtml = fetch(state_url)

        # Level 2: each state page links to one page per county
        feetcounty = scrape("""
{*
<TD class=cs><B><a href="{{ [county].county_link }}">{{ [county].countyname }}</a></B></td>
*}
""", chtml)

        for citem in feetcounty:
            for c in feetcounty[citem]:
                county_name = c['countyname']
                county_url = 'http://www.bfro.net/GDB/' + c['county_link']
                print '  ' + county_name
                shtml = fetch(county_url)

                # Level 3: each county page links to its individual sighting reports
                feetsighting = scrape("""
{*<span class=reportcaption><B><a href="{{ [sighting].sighting_link }}">{{ [sighting].sighting_date }}</a></b>*}
""", shtml)

                for sitem in feetsighting:
                    for s in feetsighting[sitem]:
                        sighting_url = ('http://www.bfro.net/GDB/' + s['sighting_link']
                                        + '&PrinterFriendly=True')
                        print '    ' + s['sighting_link']
                        dshtml = fetch(sighting_url).decode("utf-8", "ignore")
                        # Assumption: normalize non-breaking spaces to plain spaces (the
                        # exact replace() target was garbled when this post was published).
                        # No manual quote doubling: the inserts use query parameters.
                        dshtml = dshtml.replace(u'&nbsp;', u' ').replace(u'\xa0', u' ')

                        # Level 4: the individual fields of one report
                        feetdetailsighting = scrape("""
{* <span class=reportheader>Report # {{ [s].report_number }}</span> <span class=reportclassification>({{ [s].class }})</span> *}
{* <BR> <span class=field>{{ [s].submitted }}</span> <hr size="5" noshade> *}
{* <hr size="5" noshade> <span class=field>{{ [s].title }}</span> <hr size="5" noshade> *}
{* <p><span class=field>YEAR:</span>{{ [s].year }}</p> *}
{* <p><span class=field>SEASON:</span>{{ [s].season }}</p> *}
{* <p><span class=field>MONTH:</span>{{ [s].month }}</p> *}
{* <p><span class=field>DATE:</span>{{ [s].date }}</p> *}
{* <p><span class=field>STATE:</span>{{ [s].state }}</p> *}
{* <p><span class=field>COUNTY:</span>{{ [s].county }}</p> *}
{* <p><span class=field>LOCATION DETAILS:</span>{{ [s].location_details }}</p> *}
{* <p><span class=field>NEAREST TOWN:</span>{{ [s].nearest_town }}</p> *}
{* <p><span class=field>NEAREST ROAD:</span>{{ [s].nearest_road }}</p> *}
{* <p><span class=field>OBSERVED:</span>{{ [s].observed|html }}</p> *}
{* <p><span class=field>ALSO NOTICED:</span>{{ [s].also_noticed }}</p> *}
{* <p><span class=field>OTHER WITNESSES:</span>{{ [s].other_witnesses }}</p> *}
{* <p><span class=field>OTHER STORIES:</span>{{ [s].other_stories }}</p> *}
{* <p><span class=field>TIME AND CONDITIONS:</span>{{ [s].time_and_conditions }}</p> *}
{* <p><span class=field>ENVIRONMENT:</span>{{ [s].environment }}</p> *}
""", dshtml)

                        for e in feetdetailsighting:
                            for t in feetdetailsighting[e]:
                                save_sighting(t, sighting_url)

mins = (time.time() - start_time) / 60
print "Finished: %i minutes" % mins
```
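A note on quoting: when values go in as query parameters, the database driver does the escaping, so text like "didn't" can be passed as-is. Doubling apostrophes by hand *and* using parameters escapes twice, and the doubled quotes end up stored in the table. Here is a small demonstration in Python 3, using the standard library's `sqlite3` module as a stand-in for SQL Server; sqlite3 uses `?` placeholders where pymssql uses `%s`, but the principle is the same. The table and column names are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("create table sightings (observed text)")

observed = "I didn't believe it at first"

# Parameter binding: the driver quotes the value, so no manual escaping is needed.
conn.execute("insert into sightings (observed) values (?)", (observed,))

# Escaping by hand AND binding escapes twice, so the stored text changes.
conn.execute("insert into sightings (observed) values (?)",
             (observed.replace("'", "''"),))

rows = [r[0] for r in conn.execute("select observed from sightings order by rowid")]
print(rows)
```

The second row comes back with `didn''t`, which is exactly the kind of junk that then has to be cleaned up before it hits the Viz.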

It took about an hour to run, looping through each state page, then each county page, and finally each individual sighting report. I did sprinkle a few time.sleep() calls in there so I wouldn't overwhelm their website, but as it turns out, their site is so slow it didn't really matter. Check out the Bigfoot Viz and enjoy!
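If you want the "don't hammer their site" part to be a bit more deliberate than scattered sleeps, a minimal Python 3 sketch is a small wrapper that spaces requests out and retries a failed fetch after a pause. `PoliteFetcher` is a hypothetical helper; the 2-second spacing and single 30-second retry echo the waits mentioned in this post. The fetch function, clock and sleep are passed in, so it works with `urllib`, another HTTP client, or a test stub.

```python
import time


class PoliteFetcher:
    """Space requests at least min_interval seconds apart and retry failures."""

    def __init__(self, fetch_func, min_interval=2.0, retries=1, retry_wait=30.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.fetch_func = fetch_func      # callable: url -> page body
        self.min_interval = min_interval  # minimum seconds between requests
        self.retries = retries            # extra attempts after the first failure
        self.retry_wait = retry_wait      # pause before each retry
        self.clock = clock
        self.sleep = sleep
        self._last_request = None

    def _wait_turn(self):
        # Sleep only as long as needed to keep requests min_interval apart.
        if self._last_request is not None:
            elapsed = self.clock() - self._last_request
            if elapsed < self.min_interval:
                self.sleep(self.min_interval - elapsed)
        self._last_request = self.clock()

    def get(self, url):
        for attempt in range(self.retries + 1):
            self._wait_turn()
            try:
                return self.fetch_func(url)
            except OSError:  # urllib.error.URLError is a subclass of OSError
                if attempt == self.retries:
                    raise
                self.sleep(self.retry_wait)
```

With a real site you would pass something like `lambda url: urllib.request.urlopen(url).read()` as `fetch_func`. Measuring elapsed time, rather than always sleeping a fixed amount, means a slow server (like this one) doesn't get an extra delay on top of its own.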